Pourang Irani (right) with students inspecting a spatio-temporal dataset on a large screen display.

The human architect

Since the dawn of time, humans have navigated the world through our five senses. Using pervasive technologies already at our disposal, computer scientist Pourang Irani is developing a sixth—one that he believes will make us “truly human.”

True to its name, ubiquitous computing and its devices are everywhere—our phones, appliances, vehicles—gathering data about us and our environment.

As the presence of computing has evolved from the mainframe, to the desktop computer, then to mobile devices and the Internet of Things, it has created a need for people to access information anywhere and at any time.

As a Tier 2 Canada Research Chair in Ubiquitous Analytics funded by the Natural Sciences and Engineering Research Council of Canada, Irani is focused on enhancing our human capabilities by providing data in ways that will help us make better decisions and navigate our day-to-day.

“We say we’re a very data-intensive society, but we are really only scratching the surface because we don’t have the tools to actually be able to look at that information and get it when we need it to be able to change something. Theoretically, it can be collected; but in reality, it’s a far cry.”

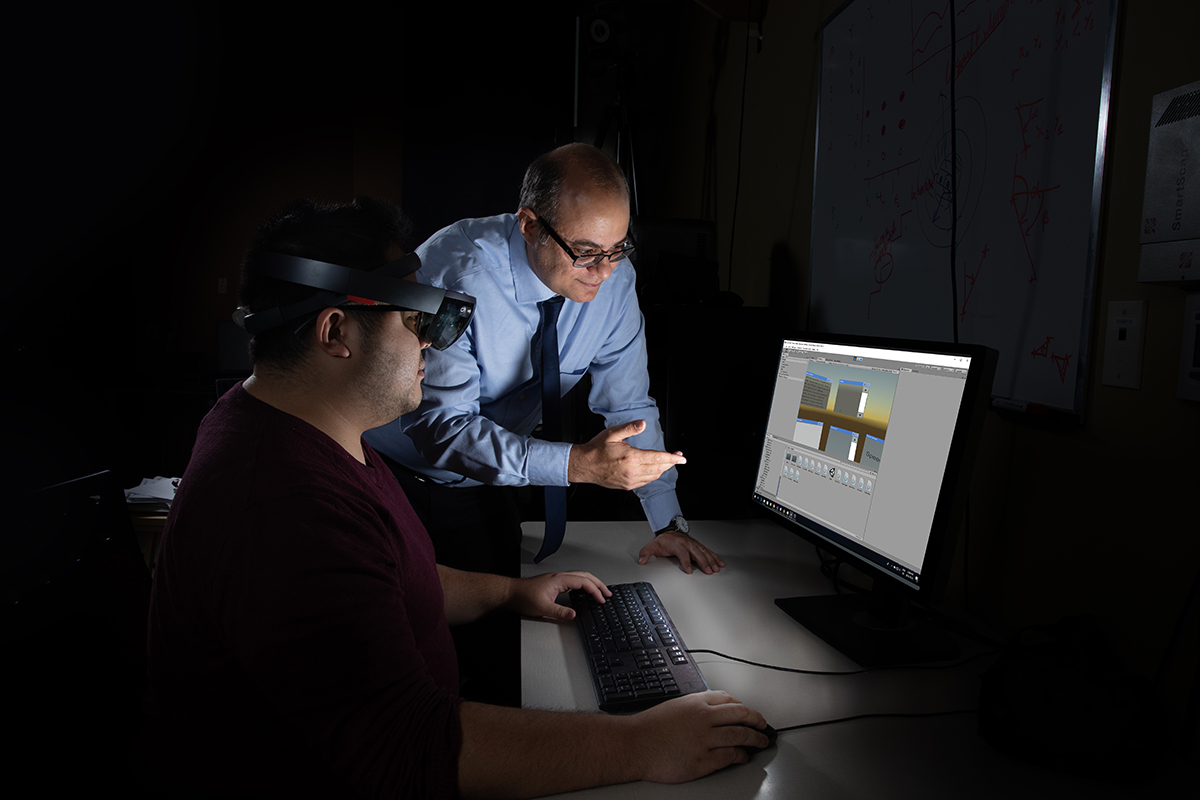

Pourang Irani (right) with MSc student Kenny Hong working on augmented reality/virtual reality training tools for neonatal resuscitation.

Irani’s goal is to augment devices so they can provide data that will help us in every aspect of our lives—from the practical to the consequential. His lab is currently in the middle of a multi-year project looking at how to summarize video content, then sync it with a person’s tasks for situations that require following instructions—like baking a cake, or performing CPR.

A lengthy how-to video would be pared down to shorter clips that isolate each step in the process and are activated by the user’s activity. Only once your device recognizes you’ve called 911 would it play the video on delivering chest compressions. Irani’s lab has already leveraged omni-directional cameras on mobiles in a system called SurroundSee, which gives smartphones peripheral vision to detect objects and user activity that “only comes in play when we’re in the environment and in the context of that task.”

Given the nature of his work, Irani is often mistaken for a “techy guy.” Yet while the Human-Computer Interaction Lab is filled with electronics, his office is anchored by a wall of books. There’s an old Moleskine notebook on his desk, along with a smartphone, his first and only, which he purchased two years ago.

He wanted to study architecture at university, but the program was full, so he chose computer science. Now, he observes people instead of buildings for clues to his next project. It’s how CrashAlert came about: by watching people’s hapless attempts to walk and text. The app, developed with post-doctoral researcher Juan David Hincapié-Ramos, uses a distance-sensing camera to scan the path ahead and alert users to approaching obstacles.

“It’s not something I want to encourage,” Irani explains, “but the reality is we need knowledge as humans and we’re thirsty for having a social life. Any tool that will get us closer to that will be used in every single way possible. Humans are very good at adapting tools for their own uses. The person who came up with the smartphone didn’t expect people to walk and type. If they had, their interfaces would have looked different.”

This is why Irani wants to know how his prototypes will fare in human hands. For that, he needs to bring his technology outside the lab and into people’s everyday lives. The support he has received through his CRC appointment will allow him to conduct such longitudinal field studies.

“It’s not enough to simply look at the technical aspects, but also the social aspects and how that plays out with our interaction with one another. If it does have a negative impact, then let’s find a way to mitigate that before the technology is actually deployed. Let’s become a bit more aware of our actions, our thoughts, and our interactions with other people and the environment. I think by doing that you can resolve lots of issues in our society.”

Upgrading

Pourang Irani and his team of graduate students are enhancing the technologies we interact with on a daily basis. His recently launched NSERC CREATE (Collaborative Research and Training Experience) program is designed to train graduate students in Visual and Automated Disease Analytics. Other projects funded by NSERC include the following:

SPATIAL ANALYTICS INTERFACES

Smartphones give us access to a wealth of information, but the actual window we have to view the data is limited. While screens have increased in size to combat this problem, Irani and Barrett Ens have designed wearable user interfaces for smart glasses that project interactive digital screens onto a person’s physical environment, expanding the amount of viewing space available.

AROUND-DEVICE INPUT

Advances in motion sensing technology have allowed researchers to explore how we can interact with mobile devices beyond their physical space.

Irani and Khalad Hasan have worked on expanding the capability of devices to sense all around them, turning common tables into interactive surfaces or recognizing mid-air thumb gestures to make it easier to use smartphones one-handed.

DATA VIDEOS

Watch any news story and you’ll likely see journalists use data videos and motion graphics that incorporate visualizations about facts to illustrate stories. The effects are persuasive and compelling.

Irani and Fereshteh Amini deconstructed such videos to understand what components are used and why they are so impactful. Their research, conducted while Amini interned at Microsoft Research, led to a new tool that will help the average person make data videos.

– From the Winter 2019 edition of ResearchLIFE

Research at the University of Manitoba is partially supported by funding from the Government of Canada Research Support Fund.